A list of popular generative models

- Variational Autoencoders (VAE): VAEs are generative models that can be used for unsupervised learning. They use variational inference to learn a latent space representation of the input data.

- Generative Adversarial Networks (GAN): GANs are another type of generative model that consists of a generator and a discriminator. They are trained in a competitive manner to generate realistic samples.

- PixelCNN: PixelCNN is an autoregressive convolutional neural network that models the conditional distribution of each pixel given the previously generated pixels in an image. It generates images by sampling pixel values sequentially, one pixel at a time.

- Glow: Glow is a generative flow-based model that uses invertible transformations to model complex data distributions. It can generate high-quality samples and perform density estimation.

- Neural Autoregressive Flows (NAF): NAF is a class of generative models that combine invertible transformations with autoregressive models. They can model complex distributions, although whether sampling or density evaluation is the efficient direction depends on how the autoregressive structure is arranged.

- Normalizing Flows: Normalizing flows are a family of generative models that transform a simple base distribution into a more complex target distribution using a series of invertible transformations.

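- Neural Ordinary Differential Equations (NODE): NODE models parameterize the time derivative of a hidden state with a neural network and integrate it with an ODE solver to model the dynamics of a system. They can be used for generative modeling by learning the ODE parameters, for example as continuous normalizing flows.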

- Langevin Dynamics: Langevin dynamics is a stochastic process that can be used for sampling from complex distributions. It is based on the idea of simulating a particle undergoing random motion in a potential field.
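
To make the last item a little more concrete, here is a minimal sketch of unadjusted Langevin dynamics. The one-dimensional standard Gaussian target, the step size, and the function names `score` and `langevin_sample` are assumptions chosen for illustration and do not come from any particular library.

```python
import numpy as np

def score(x):
    """Gradient of the log-density of a standard Gaussian target (assumed for illustration)."""
    return -x

def langevin_sample(n_chains=5000, n_steps=500, step_size=0.05, seed=0):
    """Unadjusted Langevin dynamics: x <- x + (step/2) * score(x) + sqrt(step) * noise."""
    rng = np.random.default_rng(seed)
    # Start the particles far from the target so the drift has work to do.
    x = rng.uniform(-5.0, 5.0, size=n_chains)
    for _ in range(n_steps):
        noise = rng.standard_normal(n_chains)
        x = x + 0.5 * step_size * score(x) + np.sqrt(step_size) * noise
    return x

samples = langevin_sample()
print(samples.mean(), samples.var())
```

With a small step size, the printed mean and variance should land close to 0 and 1. Because the update is not corrected with an accept/reject step, a small discretization bias remains.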

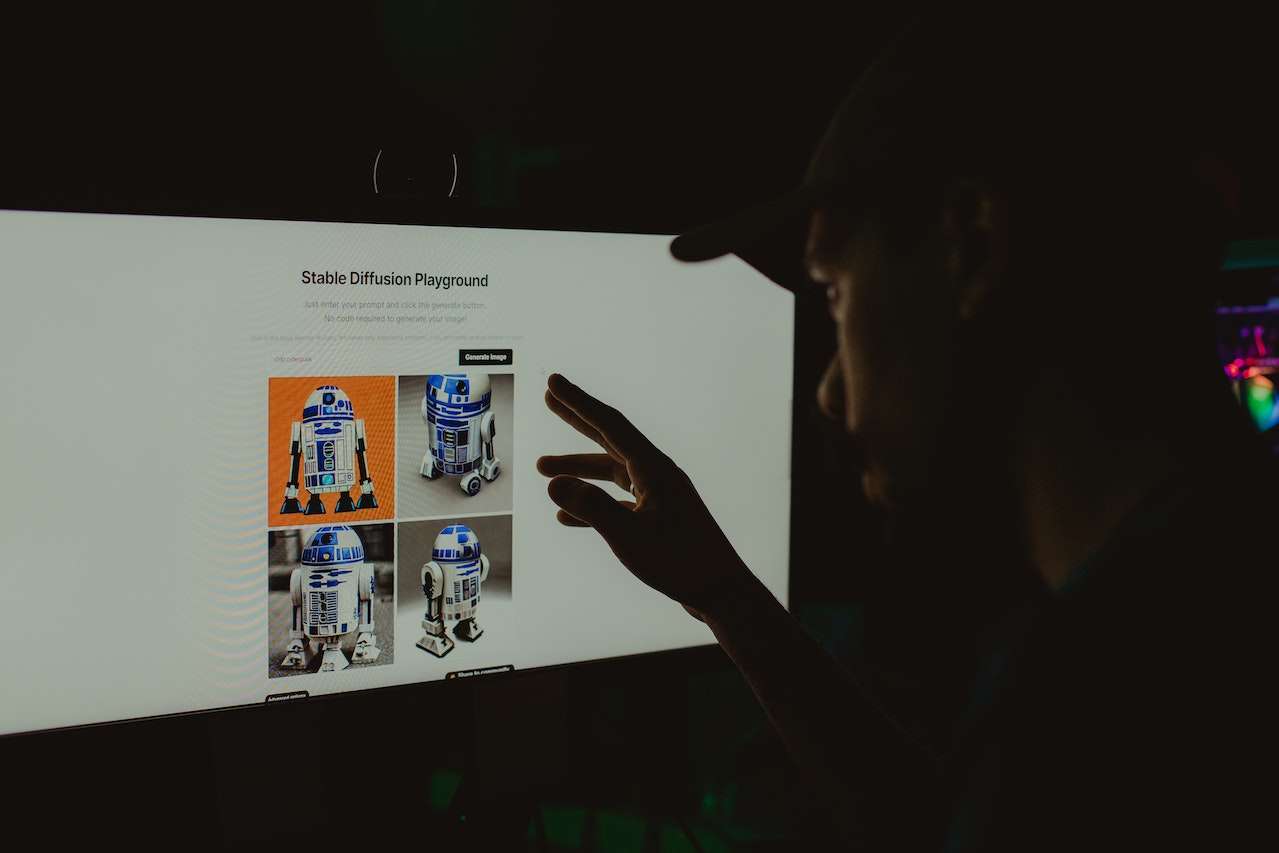

Understanding Stable Diffusion Models: A Powerful Tool for Generative Modeling

Generative models have reshaped the field of machine learning by letting us generate new samples that resemble the training data. Among the various generative models, diffusion models have gained considerable attention for their ability to capture complex data distributions. In this post, we will explore stable diffusion models, their key concepts, and their applications in generative modeling.

Understanding Diffusion Models: Diffusion models are probabilistic models that learn to turn samples from a simple distribution (e.g., Gaussian) into samples that resemble the training data through a sequence of small diffusion steps. In the common formulation, a forward process gradually adds noise to training examples until they are close to pure Gaussian noise, and the model is trained to reverse that corruption one step at a time. Generation then starts from random noise and repeatedly removes a little of it, so the process can be thought of as gradually denoising a sample until it approximates the target distribution.
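
As a rough sketch of the noising direction that a diffusion model is trained to undo, the snippet below uses the DDPM-style Gaussian forward process. This is one common formulation, assumed here rather than taken from the text above. The linear noise schedule, its endpoints, and the helper names `make_alpha_bar` and `forward_diffuse` are illustrative choices.

```python
import numpy as np

def make_alpha_bar(n_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factor for a linear noise schedule (assumed values)."""
    betas = np.linspace(beta_start, beta_end, n_steps)
    return np.cumprod(1.0 - betas)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t given x_0 in closed form: sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise."""
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = rng.uniform(2.0, 3.0, size=10000)   # toy "data", far from a standard Gaussian
x_early = forward_diffuse(x0, 10, alpha_bar, rng)
x_late = forward_diffuse(x0, 999, alpha_bar, rng)
print(x_early.mean(), x_late.mean(), x_late.std())
```

After a few steps the samples still sit near the original data. By the last step they are statistically close to standard Gaussian noise, which is the starting point for generation.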

The Role of Stability: Stability is a crucial aspect of diffusion models, ensuring that the diffusion process remains tractable and the model can accurately learn the target distribution. In this context, stability means that each transformation applied during the diffusion process stays well-behaved, so that the sequence of steps neither diverges nor collapses to a degenerate output. This stability enables efficient training and reliable sampling from the learned distribution.

Components of Stable Diffusion Models:

- Diffusion Step: At the core of a stable diffusion model lies the diffusion step, which defines how the variables are transformed at each iteration. Typically, a diffusion step applies a small Gaussian perturbation (in the noising direction) or a learned denoising update (in the generating direction) to the variables.

- Invertible Transformations: Stable diffusion models often employ invertible transformations, which are bijective mappings between input and output spaces. Because such a mapping can be run in both directions and its Jacobian determinant is tractable, the probability density of a sample can be computed efficiently with the change-of-variables formula.

- Permutation-Invariant Architectures: To ensure stable and consistent learning, permutation-invariant architectures are commonly employed in diffusion models. These architectures leverage symmetries in the data and ensure that the model’s predictions do not depend on the ordering of the variables.
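
The density computation mentioned under invertible transformations can be sketched with the change-of-variables formula. The example below uses a one-dimensional affine map as the simplest possible bijection. The map, its parameters, and the function names are assumptions for illustration, not a description of any specific model.

```python
import numpy as np

def base_log_prob(z):
    """Log-density of a standard Gaussian base distribution."""
    return -0.5 * (z ** 2 + np.log(2.0 * np.pi))

def forward(z, scale, shift):
    """Invertible affine map from base space to data space."""
    return scale * z + shift

def inverse(x, scale, shift):
    """Exact inverse of `forward`."""
    return (x - shift) / scale

def log_prob(x, scale, shift):
    """Change of variables: log p(x) = log p_base(f^{-1}(x)) - log|df/dz|."""
    z = inverse(x, scale, shift)
    return base_log_prob(z) - np.log(np.abs(scale))

x = np.array([-1.0, 0.5, 3.0])
scale, shift = 2.0, 1.0
print(log_prob(x, scale, shift))
```

Here the result is simply the log-density of a Gaussian with mean 1 and standard deviation 2. Deeper models stack many such bijections and sum their log-determinant terms.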

Applications of Stable Diffusion Models:

- Image Generation: Stable diffusion models excel in generating realistic and high-quality images. By training on large datasets, these models can learn the intricate details of the distribution of natural images and produce visually appealing samples.

- Data Augmentation: Stable diffusion models can be used for data augmentation, enhancing the training set by generating additional synthetic samples. This augmentation technique helps to improve the performance and generalization of downstream tasks such as classification or object detection.

- Denoising and Inpainting: Because generation in a diffusion model is itself an iterative denoising procedure, stable diffusion models can be employed for denoising and inpainting tasks. These models can remove noise or fill in missing parts of an image by iteratively refining their estimate while keeping the known parts of the image fixed.

- Anomaly Detection: Stable diffusion models can be leveraged for anomaly detection by estimating the likelihood of test samples. Samples with low likelihood scores are likely to be outliers or anomalies, aiding in identifying abnormal instances in various domains.
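
The likelihood-thresholding idea behind anomaly detection can be sketched as follows. A fitted diagonal Gaussian stands in for the learned density here; it is an assumption made to keep the example self-contained, and a real system would plug in the generative model's own likelihood estimate. The 1% threshold and the function names are likewise illustrative.

```python
import numpy as np

def fit_gaussian(train):
    """Stand-in density model: a diagonal Gaussian fitted to the training data."""
    return train.mean(axis=0), train.std(axis=0)

def log_likelihood(x, mean, std):
    """Per-sample log-likelihood under the diagonal Gaussian."""
    z = (x - mean) / std
    return np.sum(-0.5 * z ** 2 - np.log(std) - 0.5 * np.log(2.0 * np.pi), axis=1)

def flag_anomalies(x, mean, std, threshold):
    """Mark samples whose log-likelihood falls below the threshold."""
    return log_likelihood(x, mean, std) < threshold

rng = np.random.default_rng(0)
train = rng.normal(0.0, 1.0, size=(2000, 2))
mean, std = fit_gaussian(train)

# Assumed rule: flag anything less likely than the lowest 1% of training samples.
threshold = np.percentile(log_likelihood(train, mean, std), 1.0)

test = np.array([[0.1, -0.2], [6.0, 6.0]])
flags = flag_anomalies(test, mean, std, threshold)
print(flags)
```

The first test point sits near the bulk of the training data and the second lies far outside it, so only the second should be flagged.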

Stable diffusion models have emerged as a powerful tool in the realm of generative modeling. Through the diffusion process and the use of invertible transformations, these models can learn complex data distributions, generate high-quality samples, and perform tasks such as denoising, inpainting, and anomaly detection. As research in the field progresses, stable diffusion models continue to evolve, opening new avenues for creative applications and advancements in machine learning.
